Automated analysis of archaeological wood images

Context. Within the framework of the Arch-AI-Story project, a collaboration between the MSI and the CEPAM laboratory (GReNES team) was initiated in September 2021. Its aim is to exploit the potential of AI for identifying archaeological charcoal from SEM (scanning electron microscope) images. At present, this identification is carried out case by case by experts and thus relies primarily on botanical knowledge. Advanced deep learning techniques could support anthracologists in:

- the identification of incomplete or damaged specimens;

- the identification of specimens from stands with high intraspecific variability;

- a reduction of the time/costs related to identification.

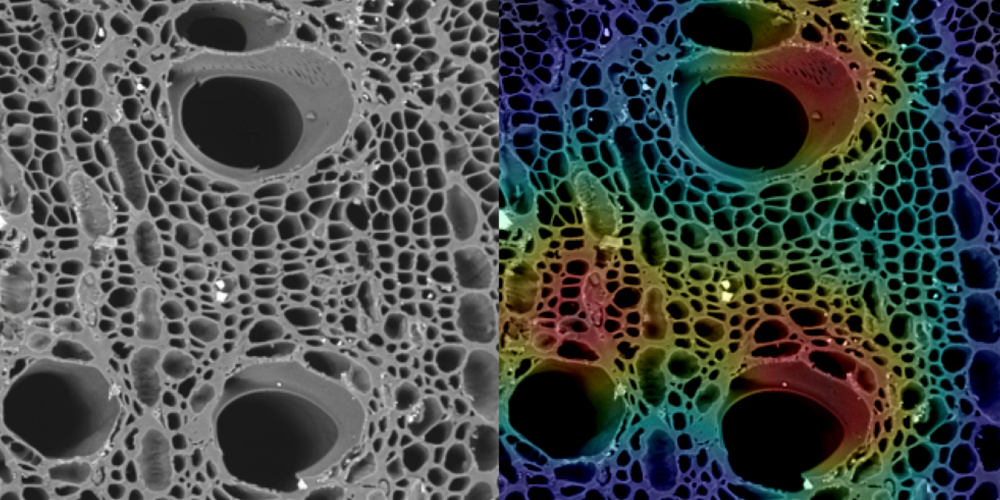

Learning. In a supervised classification setting, transfer learning approaches are used to make the most of convolutional neural networks (CNNs) on small to medium-sized data sets. The goal of this phase is to provide experts with a support tool and to extend identification to a wide range of taxa. It is therefore crucial to have, alongside the CNNs, algorithms that explain and interpret their results. In the context of responsible artificial intelligence [1], interpretation will be achieved either through external gradient-based tools (e.g. [2]-[3], see Figure 1) or through architectural changes based on comparison with prototypes ([4]).

Figure 1. Charcoal image. Original on the left (by Elysandre Puech). Overlaid heatmap on the right (by Marco Corneli), highlighting the regions used by the CNN for classification.
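To make the gradient-based heatmap idea more concrete, here is a minimal sketch of the combination step used by Grad-CAM [2], written in plain Python on a toy example. It is not the project's implementation. The function name `grad_cam`, the toy values, and the final rescaling to [0, 1] (a common display convenience) are assumptions made for illustration. In practice, the activations and gradients would be taken from the last convolutional layer of a trained CNN by a deep learning framework.

```python
from typing import List

Matrix = List[List[float]]


def grad_cam(activations: List[Matrix], gradients: List[Matrix]) -> Matrix:
    """Combine feature maps into a Grad-CAM style heatmap.

    activations[k][i][j]: value of feature map k at spatial position (i, j).
    gradients[k][i][j]: gradient of the class score w.r.t. that activation.
    """
    height, width = len(activations[0]), len(activations[0][0])

    # One weight per feature map: the spatial average of its gradients.
    weights = [sum(sum(row) for row in g) / (height * width) for g in gradients]

    # Weighted sum of the feature maps.
    heatmap = [[0.0] * width for _ in range(height)]
    for w, fmap in zip(weights, activations):
        for i in range(height):
            for j in range(width):
                heatmap[i][j] += w * fmap[i][j]

    # ReLU: keep only regions that push the class score up.
    heatmap = [[max(0.0, v) for v in row] for row in heatmap]

    # Assumption: rescale to [0, 1] so the map can be overlaid on the image.
    peak = max(max(row) for row in heatmap)
    if peak > 0:
        heatmap = [[v / peak for v in row] for row in heatmap]
    return heatmap


# Toy example (hypothetical values): two 2x2 feature maps.
activations = [
    [[1.0, 0.0], [0.0, 2.0]],
    [[0.0, 3.0], [1.0, 0.0]],
]
gradients = [
    [[0.5, 0.5], [0.5, 0.5]],      # averages to a weight of 0.5
    [[-1.0, -1.0], [-1.0, -1.0]],  # averages to a weight of -1.0
]
heatmap = grad_cam(activations, gradients)
print(heatmap)
```

The resulting low-resolution map is typically upsampled to the input image size and overlaid on it, giving a visualization of the kind shown on the right of Figure 1.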

IT Infrastructure. The MSI funded the purchase of an HPE server equipped with an NVIDIA Quadro RTX 8000 graphics card. The server, located at the CEPAM laboratory, is entirely dedicated to this project and to storing a database of the charcoal being collected by CEPAM.

[1] Rudin, Cynthia. 2019. Stop explaining black box machine learning models for high stakes decisions and use interpretable models instead. Nature Machine Intelligence 1(5):206–15.

[2] Selvaraju, Ramprasaath R., et al. 2017. Grad-CAM: Visual explanations from deep networks via gradient-based localization. Proceedings of the IEEE International Conference on Computer Vision.

[3] Srinivas, Suraj, and François Fleuret. 2019. Full-gradient representation for neural network visualization. Advances in Neural Information Processing Systems (NeurIPS):4126–4135.

[4] Chen, Chaofan, et al. 2019. This looks like that: deep learning for interpretable image recognition. Advances in Neural Information Processing Systems 32.